What is A/B testing

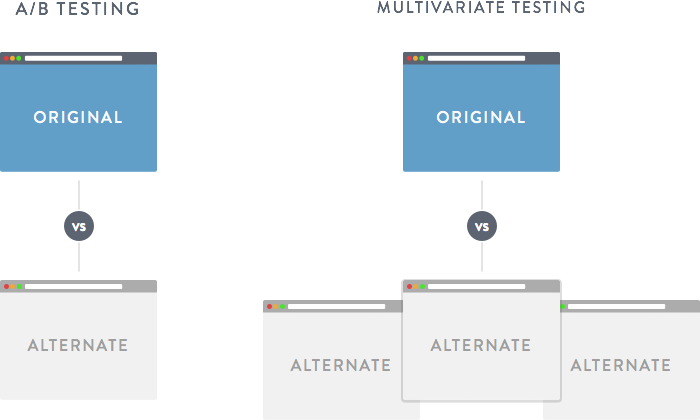

A/B testing is essentially an experiment where two or more variants of a page are shown to users at random, and statistical analysis is used to determine which variation performs better for a given conversion goal. It is also known as bucket testing and split-run testing. A/B testing is a way to compare two versions of a single variable, typically by testing a subject’s response to variant A against variant B, and determining which of the two variants is more effective.
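As a rough illustration of the "statistical analysis" step, the sketch below compares the conversion rates of two variants with a two-proportion z-test. This is one common choice, not necessarily what any particular testing tool uses. The function name and the visitor and conversion counts are made up for the example.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("conversion rate is 0% or 100% in both variants")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 5,000 visitors per variant.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the observed difference is unlikely to be due to chance alone. It does not say how large the improvement is, or whether it matters for the business.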

Why A/B testing:

A/B testing allows individuals, teams, and companies to make careful changes to their user experiences while collecting data on the results. This allows them to construct hypotheses and to learn why certain elements of their experiences impact user behavior. It also means they can be proven wrong: an opinion about the best experience for a given goal can be disproved by an A/B test.

Version A might be the currently used version (control), while version B is modified in some respect (treatment). For instance, on an e-commerce website the purchase funnel is typically a good candidate for A/B testing, as even marginal decreases in drop-off rates can represent a significant gain in sales. Significant improvements can sometimes be seen through testing elements like copy text, layouts, images and colors, but not always. In these tests, users only see one of two versions, as the goal is to discover which of the two versions is preferable.

A B2B technology company may want to improve the quality and volume of its sales leads from campaign landing pages. To achieve that goal, the team would try A/B testing changes to the headline, visual imagery, form fields, call to action, and overall layout of the page.

How A/B Testing Works

Construct your hypothesis

A hypothesis is a proposed explanation for a phenomenon, and A/B testing is a method for determining whether the data support that hypothesis. The hypothesis may arise from examining existing data, it may be more speculative, or it may be ‘just a hunch.’ (The ‘hunch’ hypothesis is particularly common for new features that enable new metrics.) Take an app navigation experiment as an example: the hypothesis could be articulated as ‘Switching to bottom navigation instead of tabs will increase user engagement’. You can then use this hypothesis to inform your decision about what change, if any, to make to the navigation style of your app, and what effect that change will have on user engagement. The important thing to remember is that the only purpose of the test is to determine whether bottom navigation has a direct, positive impact on the chosen metric, such as user engagement or average revenue per user (ARPU).

What to test (What is A? What is B? Etc.)

The following table outlines broad scenarios that can help you determine how to choose what variants to test. I’ll use our hypothetical navigation experiment as an example.

Allocate Traffic

If the hypothesis is true, and the new variant is preferred to the old, the ‘default’ configuration parameters being passed back to the app can be updated to instruct it to use the new variant. Once the new variant has become the default for a sufficient amount of time, the code and resources for the old variant can be removed from the app in your next release.

Incremental rollout

A common use case for A/B testing platforms is to repurpose them as an incremental rollout mechanism, where the winning variant of the A/B test gradually replaces the old variant for all users. The A/B test can be viewed as a design test, whereas the incremental rollout is a Vcurr/Vnext test to confirm that the chosen variant causes no adverse effects on a larger segment of your user base. Incremental rollout can be performed by stepping up the percentage of users receiving the new variant (for example, 1%, 2%, 5%, 10%, 20%, 25%, 50%, 100%) and checking that no adverse results are observed before advancing to the next step. The rollout can also be staged by other segments, including country, device type, or user segment, or the new variant can be rolled out to specific user groups first (e.g., internal users).
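One way to implement the percentage steps is to hash each user ID into a stable bucket, so that a user who received the new variant at 5% still receives it at 10%. The sketch below is a minimal illustration under that assumption. The names `bucket`, `gets_new_variant`, and the salt `"nav-rollout"` are hypothetical and do not come from any specific platform.

```python
import hashlib

# Rollout percentages taken from the example steps in the text.
ROLLOUT_STEPS = [1, 2, 5, 10, 20, 25, 50, 100]


def bucket(user_id: str, salt: str = "nav-rollout") -> float:
    """Map a user to a stable value in [0, 100) based on a hash of the ID."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) / 0x100000000 * 100


def gets_new_variant(user_id: str, percent: float) -> bool:
    """A user is in the rollout when their bucket falls below the percentage."""
    return bucket(user_id) < percent


# Hypothetical user base, used only to show the observed share at each step.
users = [f"user-{i}" for i in range(10_000)]
for step in ROLLOUT_STEPS:
    share = sum(gets_new_variant(u, step) for u in users) / len(users)
    print(f"target {step:>3}% -> observed {share:.1%}")
```

Because the bucket depends only on the salt and the user ID, raising the percentage only ever adds users to the new variant. Changing the salt reshuffles the buckets, which is useful when a later rollout should not reuse the same early adopters.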

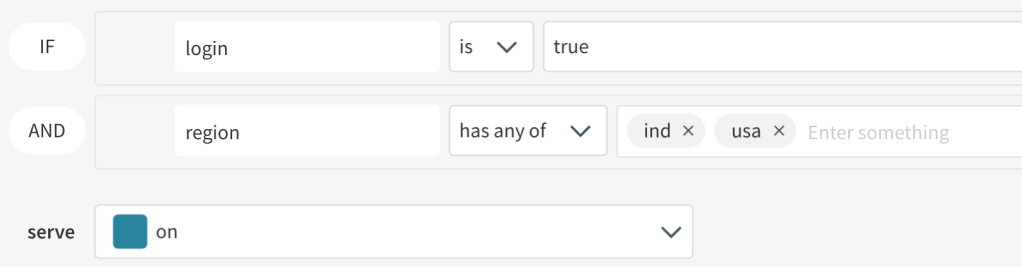

Targeting Rule

Target specific subsets of your customers based on any attribute you want (e.g., location or last-login date). These subsets can be placed into a specific treatment, or the customers in a subset can be randomly distributed between your treatments based on percentages you decide.
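A targeting rule of this kind can be sketched as a filter followed by a weighted random assignment. The `Customer` fields, the rule itself (customers in Germany who logged in within the last 30 days), and the 50/50 split are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Customer:
    customer_id: str
    country: str
    days_since_login: int


def matches_rule(customer: Customer) -> bool:
    """Hypothetical rule: customers in Germany active in the last 30 days."""
    return customer.country == "DE" and customer.days_since_login <= 30


def assign_treatments(customers, weights, seed=42):
    """Randomly split targeted customers between treatments by percentage."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    names = list(weights)
    shares = [weights[name] for name in names]
    assignment = {}
    for customer in customers:
        if matches_rule(customer):
            assignment[customer.customer_id] = rng.choices(names, weights=shares)[0]
    return assignment


customers = [
    Customer("c1", "DE", 3),
    Customer("c2", "US", 1),
    Customer("c3", "DE", 90),
    Customer("c4", "DE", 12),
]
assignment = assign_treatments(customers, {"control": 50, "treatment": 50})
print(assignment)
```

Customers who do not match the rule are left out of the assignment entirely, so they keep whatever default experience the product serves. Passing a single treatment with weight 100 places the whole subset into that treatment.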

How to test

Now that you understand what and where you’ll test, it’s down to how. There are numerous applications that enable A/B testing. Some of the more popular options:

- Split

- Google Analytics

- Optimizely

- Visual Website Optimizer

- Adobe Target

- Evergage

- Crazy Egg

The Principles of A/B Testing:

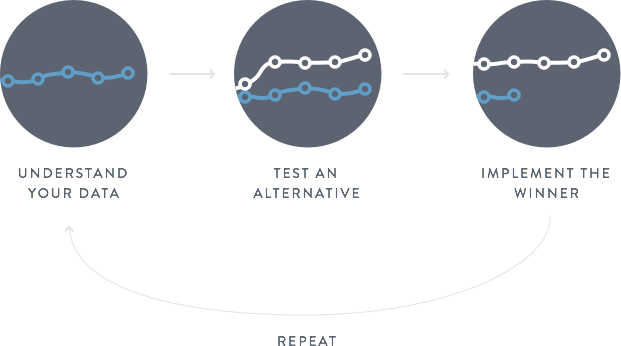

- Rake in and rank continuously:

While everything can be tested, one needs to thoroughly evaluate and prioritize experiments that have a high potential impact on the key business metric in order to accelerate product optimization.

Experiment’s Impact = Users Impacted x Needle Moved

The potential impact, along with the development effort, together drives the decision of picking experiments. The more objective the process the better.

- Refine and repeat:

Insights from most experiments drive new hypotheses and further testing. The success of the new FAB icon can incite further testing of different icons, colors, button types, communication mechanisms, etc. Which ones we prioritize depends solely on the strength of the hypothesis and the implementation effort.

- Run Controlled Experiment:

The outside world often has a much larger effect on metrics than product changes do. Users can behave differently depending on the day of the week, season of the year, or even the source of the app install. Controlled experiments isolate the impact of a product change while controlling the influence of external factors. It’s important to run the experiment on users under identical conditions until a sufficient number of data points has been collected to reach statistical significance.

- Put Mission in Perspective:

While A/B testing is the path to product optimization, the only thing that trumps metrics is Mission. Keeping a view of the long-term mission and direction of the company prevents the product roadmap and optimizations from moving in a direction that would eventually require course correction.

Beyond that, though, cracking the code of A/B testing is synonymous with building a world-class product.
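The impact formula under "Rake in and rank continuously" can be turned into a simple ranking. The sketch below multiplies users impacted by the expected lift and then divides by development effort. Dividing by effort is only one possible way to combine the two factors and is an assumption here, as are the backlog entries and their numbers.

```python
from dataclasses import dataclass


@dataclass
class Experiment:
    name: str
    users_impacted: int   # users who would see the change
    needle_moved: float   # expected relative lift in the key metric, e.g. 0.02
    effort_days: float    # estimated development effort

    @property
    def impact(self) -> float:
        """Experiment's Impact = Users Impacted x Needle Moved."""
        return self.users_impacted * self.needle_moved


def rank(experiments):
    """Order experiments by impact per day of effort, highest first."""
    return sorted(experiments, key=lambda e: e.impact / e.effort_days, reverse=True)


# Hypothetical backlog; none of these figures come from real data.
backlog = [
    Experiment("bottom navigation", users_impacted=100_000, needle_moved=0.02, effort_days=10),
    Experiment("new FAB icon", users_impacted=40_000, needle_moved=0.015, effort_days=2),
    Experiment("checkout copy", users_impacted=15_000, needle_moved=0.05, effort_days=1),
]
for exp in rank(backlog):
    print(f"{exp.name}: impact={exp.impact:.0f}, per day={exp.impact / exp.effort_days:.0f}")
```

Writing the estimates down like this makes the prioritization easier to challenge, even though the inputs themselves remain guesses.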

Understand Segments

Over what segment are you going to run your test?

Business segments: which product types or markets? Which devices? Which users?

Rather than looking at this from a simple testing perspective, consider:

Operational constraints: can you take the findings from the tested traffic, roll them out to the business at large, and keep a well-maintained codebase? Nobody will be able to manage the complexity of running a different version of the code for every different product line.

Reporting constraints: how do we report uplift from testing to the business? What is it worth over the course of the year, and how will this be reconciled with budgeting, financing, and forecasting? Web development costs money, which is a seriously important reason to report uplift with KPIs.

Technical constraints: your split tests probably depend on cookies. They might depend on users. If you can’t get the data, clean it up, and report on the conversion, you can’t run the test. You need the infrastructure to segment your traffic: if you can’t pick out users with 2 or more items in their shopping cart, you can’t test this segment.